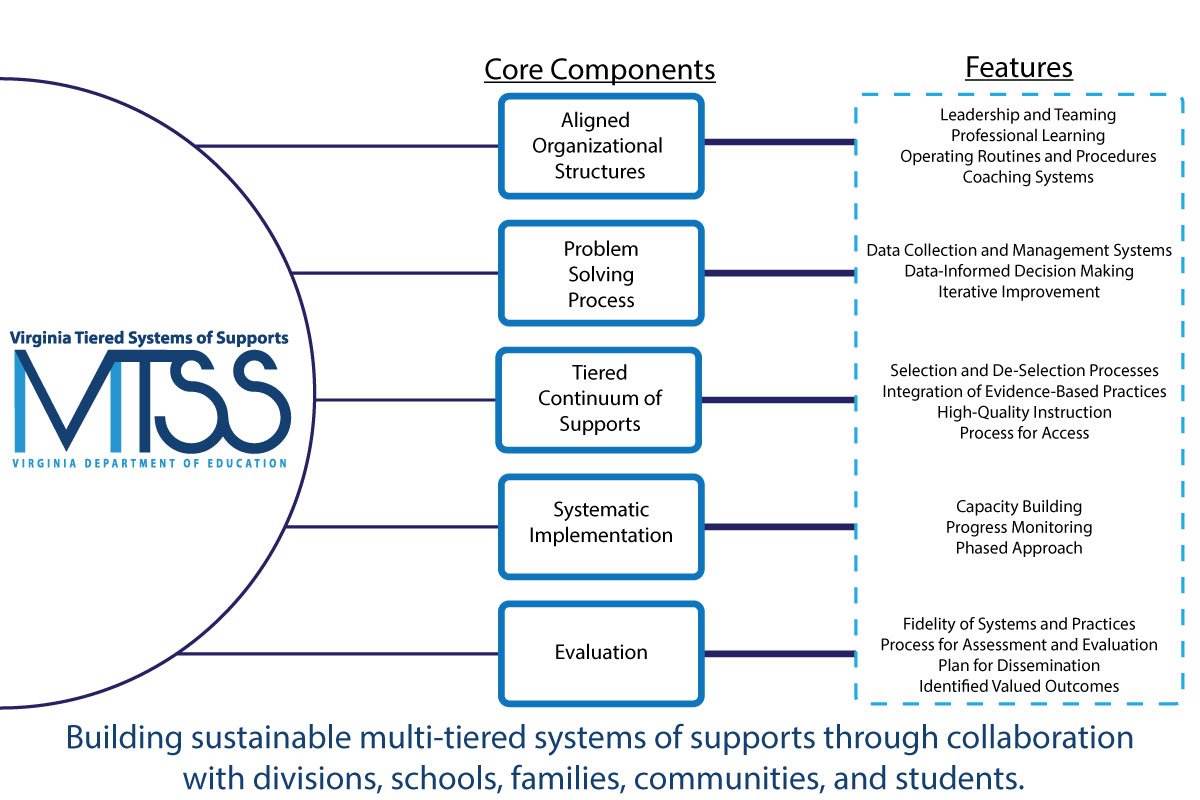

Virginia’s MTSS Core Components & Features 2.0

We are thrilled to announce the launch of our updated core components and their accompanying features. These core components anchor our efforts to support effective implementation of a Multi-Tiered System of Supports (MTSS) framework.

In collaboration with our VDOE, VTSS-RIC, and TTAC partners, we spent the past year carefully evaluating our prior support to the field and addressing the new and evolving needs of the school divisions that receive support from the VTSS-RIC. The updated core components build on our existing MTSS work, creating a solid MTSS foundation for divisions new to implementation while enhancing the MTSS work of our existing division partners. As our organization's approach to supporting the field has evolved, we have revised our MTSS core components to better align with our collective contextual needs and with the growing body of research and evidence on MTSS implementation.

Our core components and features are summarized in this shareable one-pager graphic. The full text of our components and features is available below, as well as in a downloadable Word document.

Aligned Organizational Structures: The intentional organization of a system’s processes and components that work in concert to support a common vision, mission, and set of identified goals.

- Leadership and Teaming: Implementation of evidence-based practices and systems is guided, coordinated, and administered by a local team composed of representatives of leadership, stakeholders, implementers, consumers, and content experts. This team is responsible for ensuring high implementation fidelity, communication, resource management, and data-based decision-making (PBIS Blueprint).

- Professional Learning: Activities that are data-driven, content-focused, and aligned to the instructional and growth needs of all students and staff. Professional learning activities should be collaborative, purposeful, planned, sustained over time, job-embedded, classroom-focused, and aligned with VDOE's mission and vision (Michigan MTSS Practice Profile).

- Operating Routines and Procedures: Detailed step-by-step explanations of procedures and standards necessary for districts and schools to be successful (e.g., communication, planning, funding, and policy).

- Coaching Systems: Coaching for systems change involves collaborating with school and division leaders to develop, implement, and sustain an MTSS framework that creates educational environments that improve student outcomes. It does so by providing leadership, facilitating resource allocation, supporting fidelity of evidence-based practices, and addressing large-scale reform and whole-school organizational improvement (Brown et al., 2005; Fixsen et al., 2005; Fullan & Knight, 2011; March et al., 2016; Neufeld & Roper, 2003).

Problem-Solving Process: A process for collecting, analyzing, and evaluating data to inform educational decisions about instruction, intervention, implementation, resource allocation, policy development, and movement within a multi-tiered system.

- Data Collection and Management Structures: Includes the collection, organization, storage, visual display, and reporting of data. Data systems (e.g., data collection tools and applications) promote consistent data collection and reflect a range of settings and stakeholders (e.g., community data, student and family perceptions).

- Data-Informed Decision-Making: A consistently used process for analyzing and evaluating data to inform educational decisions about instruction, intervention, resource allocation, professional learning, coaching, policy development, and movement within a multi-level system to support sustainable systemic improvement and whole-child learner outcomes.

- Iterative Improvement: The use of a procedure to continuously evaluate and refine practices and initiatives through regular cycles of data review and action planning, resulting in progressively closer approximations of the desired outcome.

Tiered Continuum of Supports: A three-tiered continuum of integrated academic, behavioral, and social-emotional instruction, intervention, and support that is evidence-based and responsive to the unique needs of each learner.

- Selection and De-selection Processes: The explicit act of evaluating whether to select, modify, or discontinue practices, programs, and/or initiatives based on data, contextual fit, and feasibility.

- Integration of Evidence-Based Practices: The strategic selection and use of teaching and learning approaches that scientifically based research has shown to be effective in academics, behavior, and social-emotional wellness.

- High-Quality Instruction: Curricula, practices, programs, and learning environments are data-driven, aligned with standards, evidence-based, engaging, differentiated, and meet the unique needs of each individual learner.

- Process for Access: Written guidelines for request-for-assistance processes and for the use of school-specific data decision rules (drawing on multiple data sources) that identify how students and staff access and exit advanced-tier supports. System-wide progress monitoring tracks the proportion of students experiencing success and the use of advanced-tier supports so that decision rules can be modified as needed (Center on Positive Behavioral Interventions and Supports, 2020).

Systematic Implementation: The use of an intentional process to inform, improve, and refine practices or initiatives that can be scaled and sustained over time, resulting in improvement to valued outcomes.

- Capacity Building: Refers to the development of infrastructure, including the systems, activities, and resources that support the use of evidence-based interventions and strategies for adopting and sustaining innovations (Ward et al., 2015; Ward et al., 2017). Specifically, capacity is built across three areas:

  - Leadership (i.e., active involvement in facilitating and sustaining systems change to support the implementation of effective practice through strategic communication, decisions, guidance, and resource allocation).

  - Competency (i.e., strategies to develop, improve, and sustain educators’ abilities to use evidence-based practices (EBPs) and strategies as intended in order to achieve desired outcomes).

  - Organization (i.e., strategies for analyzing, communicating, and responding to data in ways that result in continuous improvement of systems and supports for educators to use EBPs and strategies with good outcomes) (Ward et al., 2017).

- Progress Monitoring: Progress monitoring is used to assess students’ performance, quantify a student’s rate of improvement or responsiveness to instruction or intervention, and evaluate the effectiveness of instruction using valid and reliable measures (Center on Multi-Tiered System of Supports at the American Institutes for Research, 2023). At the systems level, progress monitoring is used to assess overall intervention effectiveness and improvement.

- Phased Approach: Whether programs or practices are newly selected or being scaled, the change requires strategic planning, resources, and time. Change at the building or division level should be implemented in discernible phases (e.g., as guided by implementation science or improvement science) to explore, test, and evaluate feasibility and effectiveness at a manageable scale and in a timely and effective manner. Studying practices systematically in this way helps organizations invest in the infrastructure necessary to scale up and sustain them over time (Fixsen et al., 2005).

Evaluation: A plan for gathering, communicating, and reporting information around key questions to monitor impact, determine potential barriers, and inform decisions related to identified valued outcomes.

- Fidelity of Systems and Practices: Fidelity focuses on how well a program or practice is being implemented, considering not only the person who is implementing it but also the quality of the systems in place to support its use, such as the selection, training, and coaching systems. Fidelity data inform and engage all stakeholders (e.g., division staff, instructional coaches, building administrators, and teachers) as new skills are implemented and refined. Results can be used to celebrate and reinforce progress or to provide support and guidance for organizational improvement in specific practices and skills (Active Implementation Hub, 2015).

- Process for Assessment and Evaluation: At each level of implementation (i.e., division and school), assessments are clearly defined and matched to the unique needs of each and every learner across academics, behavior, and social-emotional wellness. Schedules and timelines are documented and communicated. Reports are generated to build on strengths while also addressing areas of need at each level of implementation (e.g., level of use, student performance data, differentiated supports) for problem-solving and to inform practice. A range of strategies should be used to collect the perspectives of stakeholders and partners (e.g., community members, families, and students), and these perspectives should be monitored to create evaluation feedback loops.

- Plan for Dissemination: Annual progress reports are designed and disseminated to inform external stakeholders/partners on the activities and outcomes related to MTSS implementation fidelity (Center on Positive Behavioral Interventions and Supports, 2020).

- Identified Valued Outcomes: Valued outcomes help determine whether the initiative is having the intended impact on students, school personnel, families, and the community. The emphasis on valued outcomes directly informs decisions related to resources, scaling up, and/or improving effectiveness (Center on Positive Behavioral Interventions and Supports, 2020).

Active Implementation Hub. (2023, August). AI modules and AI lessons. https://implementation.fpg.unc.edu/resource/active-implementation-formula-framework-overviews-modules/

Brown, C. J., Stroh, H. R., Fouts, J. T., & Baker, D. B. (2005). Learning to change: School coaching for systemic reform (Report prepared for the Bill & Melinda Gates Foundation). Mill Creek, WA: Fouts & Associates, L.L.C.

Center on Positive Behavioral Interventions and Supports. (2020). Positive Interventions and Supports District Systems Fidelity Inventory (DSFI) - Version 0.2. Eugene, OR: University of Oregon. Retrieved from www.pbis.org

Fixsen, D. L., Naoom, S. F., Blase, K. A., Friedman, R. M., & Wallace, F. (2005). Implementation research: A synthesis of the literature. Tampa, FL: University of South Florida, National Implementation Research Network.

Fullan, M., & Knight, J. (2011). Coaches as systems leaders. Educational Leadership, 69(2), 50–53.

March, A. L., Castillo, J. M., Batsche, G. M., & Kincaid, D. (2016). Relationship between systems coaching and problem-solving implementation fidelity in a Response-to-Intervention model. Journal of Applied School Psychology, 32(2), 147–177. https://doi.org/10.1080/15377903.2016.1165326

National Implementation Research Network. (2020). Implementation Stages Planning Tool. Chapel Hill, NC: National Implementation Research Network, FPG Child Development Institute, University of North Carolina at Chapel Hill.

Neufeld, B., & Roper, D. (2003). Coaching: A strategy for developing instructional capacity— Promises and practicalities. Washington, DC: Aspen Institute Program on Education; Providence, RI: Annenberg Institute for School Reform.

Ward, C., Fixsen, D., & Cusumano, D. (2017). An introduction to the District Capacity Assessment (DCA): Supports for schools and teachers. Retrieved from National Implementation Research Network: University of North Carolina at Chapel Hill.

Ward, C., St. Martin, K., Horner, R., Duda, M., Ingram-West, K., Tedesco, M., Putnam, D., Buenrostro, M., & Chaparro, E. (2015). District Capacity Assessment. National Implementation Research Network, University of North Carolina at Chapel Hill.